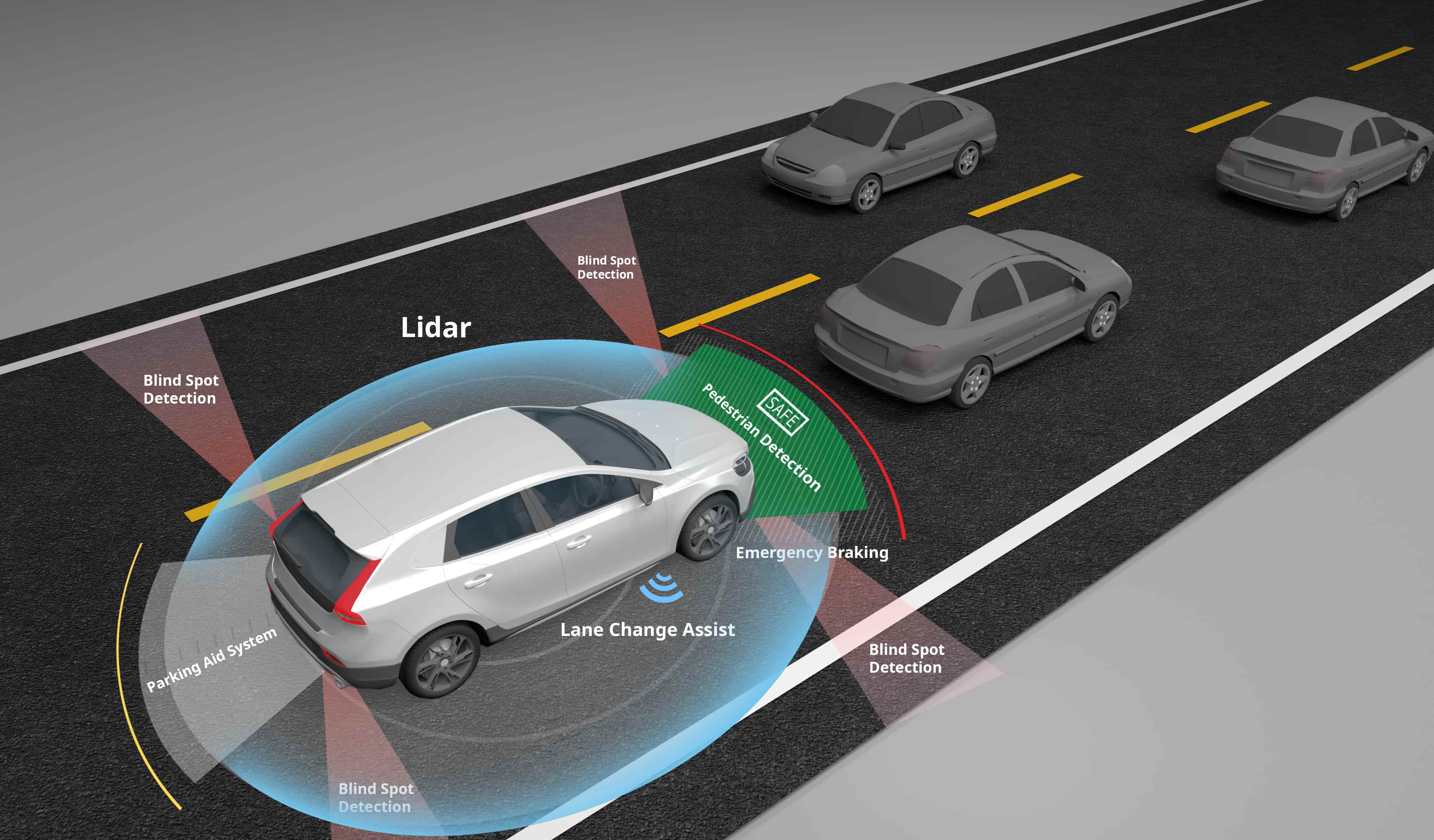

The gap between Level 2 driver assistance and true Level 4/5 autonomy is not just a matter of better algorithms; it is a matter of ground truth. For perception engineers and data scientists, the challenge has shifted from simply acquiring data to curating high-fidelity, sensor-fused datasets that hold up under the rigor of the ISO 26262 functional safety standard.

In the high-stakes environment of autonomous driving, an error margin of centimeters can mean the difference between a safe stop and a catastrophic failure. This article dissects the technical requirements of multi-modal data annotation (LiDAR + Camera), the imperative of reducing algorithmic bias through data diversity, and the non-negotiable standards of enterprise data compliance.

The Technical Reality: Why Multi-Modal Annotation is Non-Negotiable

Reliance on a single sensor modality is a well-known path to failure. Cameras excel at semantic interpretation (reading traffic signs, detecting brake lights) but struggle with depth perception and low-light conditions. LiDAR (Light Detection and Ranging) provides precise 3D spatial geometry but lacks color and texture information.

Sensor fusion is the industry-standard approach to robustness. However, annotating fused datasets requires a sophisticated workflow that synchronizes 2D raster images with 3D point clouds.

1. Calibration and Synchronization

High-precision annotation begins before the first bounding box is drawn. It requires accurate calibration of the extrinsic parameters (the relative position and orientation of the sensors) and the intrinsic parameters (focal length, principal point, lens distortion).
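To make the two parameter sets concrete, the usual pinhole camera model combines them as follows when a LiDAR point is projected into the image. This is a simplified form that ignores lens distortion, and the notation (rotation R and translation t for the extrinsics, matrix K for the intrinsics) is ours rather than that of any specific annotation platform.

```latex
\mathbf{p}_c = R\,\mathbf{p}_l + \mathbf{t}, \qquad
\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \frac{1}{z_c}\, K\, \mathbf{p}_c, \qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
```

Here p_l is a point in the LiDAR frame and p_c = (x_c, y_c, z_c) is the same point in the camera frame, so u = f_x x_c / z_c + c_x and v = f_y y_c / z_c + c_y. Only points with z_c > 0 (in front of the camera) yield valid pixels, and an error in R, t, or K shows up directly as a misaligned overlay.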

The Challenge: A temporal offset of milliseconds between a camera frame and a LiDAR sweep can result in "ghost objects" during high-speed scenarios.

The Solution: Annotation platforms must support synchronized playback and projection, allowing annotators to verify that a cluster of points in the 3D space maps perfectly to the pixels in the 2D image.
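Both the timing problem and the projection check described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a platform API: timestamps are assumed sorted and in seconds, lens distortion is ignored, and all function names are hypothetical. The example uses an identity rotation and zero translation, which presumes the LiDAR axes already coincide with the camera's (x right, y down, z forward); real rigs need the calibrated R and t.

```python
from bisect import bisect_left

def nearest_camera_frame(camera_ts, lidar_t):
    """Index of, and signed offset (s) to, the camera timestamp closest to lidar_t.
    camera_ts must be sorted in ascending order (seconds)."""
    i = bisect_left(camera_ts, lidar_t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(camera_ts)]
    best = min(candidates, key=lambda j: abs(camera_ts[j] - lidar_t))
    return best, camera_ts[best] - lidar_t

def ghost_shift_m(offset_s, relative_speed_mps):
    """How far an object moving at relative_speed_mps appears displaced
    when camera and LiDAR timestamps differ by offset_s."""
    return abs(offset_s) * relative_speed_mps

def project_point(p, R, t, K):
    """Pinhole projection of a LiDAR point p into pixels (lens distortion ignored).
    Returns None for points at or behind the camera plane."""
    # Extrinsics: rotate and translate from the LiDAR frame into the camera frame
    x, y, z = (sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
    if z <= 0:
        return None
    # Intrinsics: focal lengths (fx, fy) and principal point (cx, cy) taken from K
    return (K[0][0] * x / z + K[0][2], K[1][1] * y / z + K[1][2])

def fraction_in_box(points, R, t, K, box):
    """Share of a cluster's projectable points that land inside a 2D box (u0, v0, u1, v1)."""
    u0, v0, u1, v1 = box
    uv = [q for q in (project_point(p, R, t, K) for p in points) if q is not None]
    if not uv:
        return 0.0
    return sum(u0 <= u <= u1 and v0 <= v <= v1 for u, v in uv) / len(uv)

# Illustrative values only: a ~30 Hz camera and one LiDAR sweep timestamp
camera_ts = [0.000, 0.033, 0.066, 0.100]
idx, offset = nearest_camera_frame(camera_ts, 0.050)  # idx == 2, offset is about 0.016 s
shift = ghost_shift_m(offset, 30.0)                   # about 0.48 m at 30 m/s

R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]                 # assumed identity extrinsic rotation
t = [0.0, 0.0, 0.0]                                   # assumed zero translation
K = [[1000, 0, 640], [0, 1000, 360], [0, 0, 1]]       # hypothetical intrinsics
cluster = [(1.0, 0.5, 10.0), (1.2, 0.4, 10.0), (5.0, 0.0, 10.0), (0.0, 0.0, -5.0)]
share = fraction_in_box(cluster, R, t, K, (700, 380, 780, 440))  # 2 of 3 visible points
```

At 30 m/s (108 km/h), even the 16 ms offset in the example displaces an object by roughly half a metre, which is why teams typically also rely on hardware synchronization or motion compensation rather than nearest-timestamp pairing alone. A low in-box share for a cluster is a cue to re-check calibration or synchronization before labeling begins.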

2. 3D Point Cloud Segmentation vs. 2D Bounding Boxes

For enterprise-grade AV training, simple 2D bounding boxes are insufficient. The industry demands:

3D Cuboid Annotation: Defining the center position, length, width, height, and orientation (yaw, plus pitch and roll where needed) so that object volume, heading, and trajectory can be estimated.

Semantic Segmentation: Assigning a class label (e.g., drivable surface, vegetation, pedestrian) to every single point in the point cloud (Point-wise classification).

Lane Line & Polyline Annotation: Mapping complex geometries for HD Maps, including splines for curved roads and vectorization for intersection topology.
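To make the cuboid and point-wise representations concrete, here is a minimal sketch of a yaw-only cuboid (pitch and roll assumed zero) plus a helper that gives each LiDAR point the label of the first cuboid containing it. The class and function names are hypothetical, and datasets differ in their yaw origin and in which axis counts as "length".

```python
import math
from dataclasses import dataclass

@dataclass
class Cuboid:
    """3D box annotation: center (cx, cy, cz), size, and yaw in radians.
    Pitch and roll are assumed to be zero in this sketch."""
    label: str
    cx: float
    cy: float
    cz: float
    length: float
    width: float
    height: float
    yaw: float

    def volume(self) -> float:
        return self.length * self.width * self.height

    def contains(self, x: float, y: float, z: float) -> bool:
        # Rotate the point by -yaw into the box frame, then do an axis-aligned test.
        dx, dy = x - self.cx, y - self.cy
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        local_x = c * dx - s * dy
        local_y = s * dx + c * dy
        return (abs(local_x) <= self.length / 2
                and abs(local_y) <= self.width / 2
                and abs(z - self.cz) <= self.height / 2)

def pointwise_labels(points, cuboids, default="unlabeled"):
    """Per-point class labels derived from cuboids (first matching box wins)."""
    return [next((b.label for b in cuboids if b.contains(x, y, z)), default)
            for x, y, z in points]

# A car rotated 90 degrees, so its 4.5 m length runs along the y axis
car = Cuboid("car", 10.0, 0.0, 0.8, 4.5, 1.8, 1.6, math.pi / 2)
labels = pointwise_labels([(10.0, 2.0, 0.8), (12.0, 0.0, 0.8), (10.0, 0.0, 3.0)], [car])
# labels == ["car", "unlabeled", "unlabeled"]
```

Deriving point labels from cuboids only covers object classes; surface classes such as drivable road or vegetation still need direct point-wise labeling.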

Mitigating Algorithmic Bias: The "Long Tail" of Data

A major bottleneck in current AV development is overfitting to specific operational design domains (ODDs). An AI model trained exclusively on the sunny, structured highways of Phoenix, Arizona, will fail predictably in the chaotic, snow-covered streets of Oslo or the high-density traffic of Mumbai.

To achieve generalizability, data procurement must prioritize diversity to reduce bias.
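A practical starting point is to audit how frames are distributed across the operating conditions you care about, including combinations that are missing entirely. The sketch assumes each frame carries metadata such as `region` and `weather`; the attribute names, example values, and the single uniform `min_share` threshold are illustrative assumptions.

```python
from collections import Counter
from itertools import product

def coverage_gaps(frames, expected, min_share):
    """frames: list of metadata dicts; expected: {attribute: [values]}.
    Returns {stratum: share} for every combination below min_share,
    including combinations with no frames at all."""
    keys = list(expected)
    counts = Counter(tuple(f[k] for k in keys) for f in frames)
    total = len(frames) or 1  # avoid division by zero on an empty dataset
    gaps = {}
    for stratum in product(*(expected[k] for k in keys)):
        share = counts.get(stratum, 0) / total
        if share < min_share:
            gaps[stratum] = share
    return gaps

frames = ([{"region": "Phoenix", "weather": "clear"}] * 6
          + [{"region": "Oslo", "weather": "snow"}] * 2
          + [{"region": "Mumbai", "weather": "clear"}] * 2)
expected = {"region": ["Phoenix", "Oslo", "Mumbai"], "weather": ["clear", "snow"]}
gaps = coverage_gaps(frames, expected, min_share=0.1)
# Flags Phoenix/snow, Oslo/clear and Mumbai/snow, none of which has any frames
```

Enumerating the full cross-product matters: a plain count over existing frames would never reveal the strata that were never collected.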

Diverse Data Strategies for Robust Inference

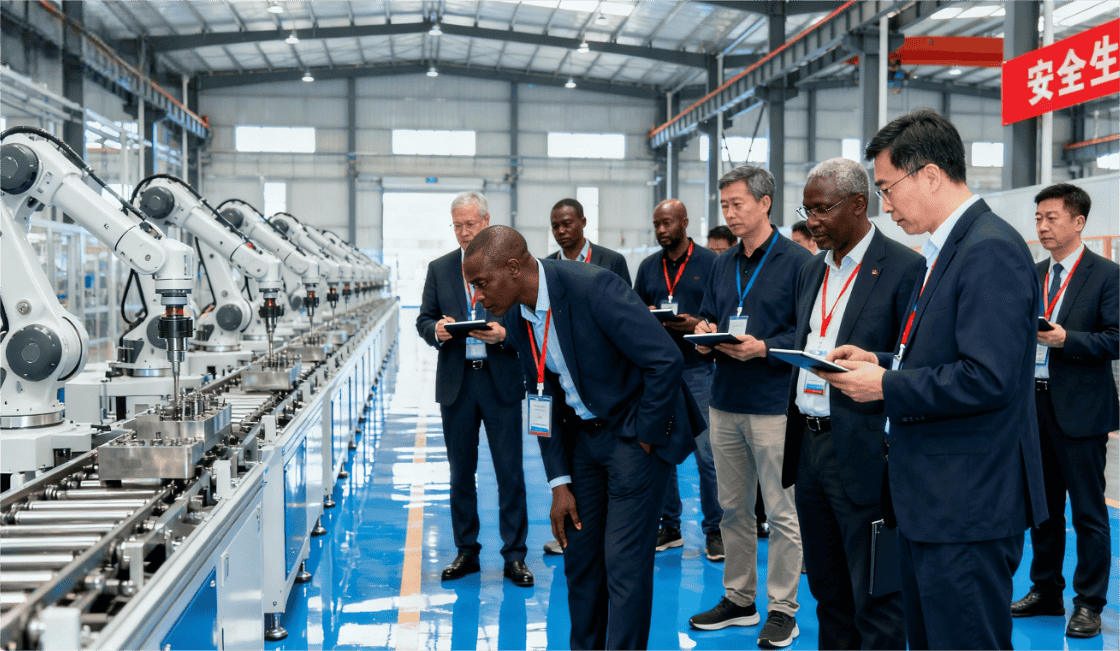

Geographic Variance: Sourcing data from different continents to capture varying road infrastructures, signage standards (Vienna Convention vs. MUTCD), and driving behaviors.

Edge Case Management: Deliberately curating "long-tail" scenarios such as occlusions, lens flare, severe weather (rain/fog/snow noise in LiDAR), and erratic pedestrian behavior.

Demographic Representation: Ensuring pedestrian detection algorithms perform equally well across all skin tones, clothing types, and mobility aids (wheelchairs, strollers), reducing the risk of biased false negatives.
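One way to check the demographic-representation goal is to compare recall (the share of ground-truth pedestrians the model detects) across annotated subgroups. The sketch assumes each ground-truth pedestrian carries a subgroup attribute, here a mobility-aid tag; the group names, counts, and the `max_gap` tolerance are illustrative assumptions.

```python
from collections import defaultdict

def recall_by_group(records):
    """records: iterable of (group, detected) pairs, one per ground-truth pedestrian,
    where detected is True if the model matched that pedestrian."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    return {g: hits[g] / totals[g] for g in totals}

def flag_recall_gaps(recalls, max_gap):
    """Groups whose recall trails the best-performing group by more than max_gap."""
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)

records = ([("wheelchair", i < 7) for i in range(10)]
           + [("stroller", i < 9) for i in range(10)]
           + [("no_aid", i < 19) for i in range(20)])
recalls = recall_by_group(records)          # wheelchair 0.7, stroller 0.9, no_aid 0.95
flagged = flag_recall_gaps(recalls, 0.1)    # ["wheelchair"]
```

With groups this small, point estimates are noisy; a real audit would also report confidence intervals before concluding that a gap is genuine.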

Technical Note: High-quality annotation filters must distinguish between "true" obstacles and environmental noise (e.g., exhaust smoke or falling leaves) to prevent phantom braking events.
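A common first-pass filter for sparse weather noise in LiDAR is radius outlier removal: points with too few neighbors within a fixed radius are flagged. This is one such technique, not a method the article prescribes; the radius and neighbor threshold are illustrative, and the brute-force neighbor search is O(n²), whereas production pipelines typically use a spatial index such as a k-d tree.

```python
import math

def radius_outliers(points, radius, min_neighbors):
    """Flag points that have fewer than min_neighbors other points within radius (metres).
    Isolated returns such as rain or snow tend to be flagged; dense phenomena such as
    exhaust plumes are not, and still need an explicit noise label from annotators."""
    flags = []
    for i, p in enumerate(points):
        neighbors = sum(1 for j, q in enumerate(points)
                        if j != i and math.dist(p, q) <= radius)
        flags.append(neighbors < min_neighbors)
    return flags

points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.0, 0.0, 0.1),
          (5.0, 5.0, 5.0)]  # a tight cluster plus one isolated return
flags = radius_outliers(points, radius=0.5, min_neighbors=2)
# flags == [False, False, False, False, True]
```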

The Enterprise Shield: Security, Compliance, and GDPR

For OEMs and Tier 1 suppliers, data security is not an IT ticket; it is a boardroom concern. Handling terabytes of visual data that contain faces and license plates falls under stringent regulatory regimes.

Compliance as a Service

When selecting a data annotation partner, the following compliance requirements and certifications are the baseline for entry:

GDPR & CCPA Compliance: PII (Personally Identifiable Information) such as faces and license plates must be blurred or anonymized before or during the annotation process to meet EU and California regulations.

ISO 27001 Certification: Shows that the organization handling your proprietary sensor data operates an information security management system (ISMS) that has been audited against the requirements of ISO/IEC 27001.

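ISO 9001 Quality Management: Demonstrates a systematic, audited approach to quality management. The standard does not itself prescribe accuracy figures, so targets such as 99.5%+ label accuracy, which determine whether the "Ground Truth" data is actually true, should be written into the contract and verified through QA sampling.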
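The PII requirement in the first item usually operates on face and license-plate boxes produced by an upstream detector. The sketch below pixelates those regions in a grayscale image stored as nested lists; the box format (x0, y0, x1, y1 with exclusive upper bounds), the block size, and the function name are assumptions, and the detector itself is out of scope.

```python
def pixelate_regions(image, boxes, block=8):
    """Return a copy of a grayscale image (list of rows of ints) in which each box
    (x0, y0, x1, y1), exclusive of x1/y1, is replaced by block-averaged pixels."""
    out = [row[:] for row in image]  # never modify the source frame in place
    h, w = len(image), len(image[0])
    for x0, y0, x1, y1 in boxes:
        # Clip the box to the image bounds
        x0, y0, x1, y1 = max(0, x0), max(0, y0), min(w, x1), min(h, y1)
        for by in range(y0, y1, block):
            for bx in range(x0, x1, block):
                cells = [(y, x) for y in range(by, min(by + block, y1))
                         for x in range(bx, min(bx + block, x1))]
                mean = sum(image[y][x] for y, x in cells) // len(cells)
                for y, x in cells:
                    out[y][x] = mean
    return out

image = [[4 * y + x for x in range(4)] for y in range(4)]
redacted = pixelate_regions(image, [(0, 0, 2, 2)], block=2)
# redacted[0][:2] == [2, 2]; pixels outside the box and the original image are unchanged
```

Coarse blocks or a solid fill are generally considered safer than light blurring, and whether a given method satisfies GDPR or CCPA is a legal determination, not a purely technical one.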

Secure Data Transmission

On-Premises vs. Private Cloud: High-value contracts often require annotators to work within VDI (Virtual Desktop Infrastructure) environments or air-gapped facilities where data never touches the open internet.

Audit Trails: Full traceability of who accessed which frame and when.
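A hash chain is one simple way to make an access log tamper-evident: each entry stores the hash of the previous one, so altering an earlier record invalidates every later hash. The class, method, and field names below are hypothetical, and the log lives in memory only.

```python
import hashlib
import json
import time

def _digest(body):
    # Canonical JSON (sorted keys) so the same entry always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class AuditLog:
    """Append-only record of who accessed which frame and when."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def record(self, user, frame_id, action, timestamp=None):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"user": user, "frame_id": frame_id, "action": action,
                "ts": time.time() if timestamp is None else timestamp, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """True if every entry links to its predecessor and its hash still matches."""
        prev = self.GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != e["hash"]:
                return False
            prev = e["hash"]
        return True

log = AuditLog()
log.record("annotator_17", "frame_000123", "view", timestamp=1.0)
log.record("qa_lead_02", "frame_000123", "edit", timestamp=2.0)
ok = log.verify()  # True until any stored entry is altered
```

The chain only proves integrity if the latest hash is also kept somewhere the log writer cannot modify, such as a separate write-once store; otherwise an attacker could simply recompute the whole chain.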

The Human-in-the-Loop (HITL) Advantage

While auto-labeling algorithms are improving, they cannot yet guarantee the safety-critical precision required for L4 deployment. The "Human-in-the-Loop" approach remains the gold standard for validation and handling complex edge cases that stump AI.
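A common human-in-the-loop pattern routes low-confidence auto-labels, and anything in a safety-critical class, to human annotators while auto-accepting the rest. The sketch assumes each prediction is a dict with hypothetical `id`, `confidence`, and `safety_critical` fields; the 0.9 threshold is illustrative, not a recommended value.

```python
def route_for_review(predictions, threshold):
    """Split auto-label predictions into auto-accepted and human-review queues.
    Safety-critical predictions always go to a human, regardless of confidence."""
    accepted, review = [], []
    for p in predictions:
        if p.get("safety_critical", False) or p["confidence"] < threshold:
            review.append(p["id"])
        else:
            accepted.append(p["id"])
    return accepted, review

predictions = [
    {"id": 1, "label": "vegetation", "confidence": 0.98},
    {"id": 2, "label": "pedestrian", "confidence": 0.97, "safety_critical": True},
    {"id": 3, "label": "sign", "confidence": 0.60},
]
accepted, review = route_for_review(predictions, threshold=0.9)
# accepted == [1]; review == [2, 3]
```

In practice teams also spot-check a random sample of auto-accepted labels, so that drift in the auto-labeler's confidence calibration is caught.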

However, this human element requires more than just clicking a mouse. It requires linguistic and cultural context.

Global Context in Data Annotation

Understanding a "Stop" sign is easy. Understanding a temporary handwritten diversion sign in a specific dialect, or interpreting local traffic gestures, requires a deep understanding of localization.

This is where Artlangs Translation distinguishes itself in the data services ecosystem.

With a legacy of expertise spanning 230+ languages, Artlangs has evolved beyond traditional translation into a comprehensive language and data solutions provider. Their experience in video localization, short drama subtitling, and game localization has built a rigorous operational framework perfect for high-volume, high-precision tasks.

Artlangs leverages its massive network of native experts not just for translation, but for multilingual data annotation and transcription. Whether it is transcribing voice commands for in-cabin AI assistants across hundreds of dialects, or providing culturally context-aware image labeling, their diverse team ensures your dataset reflects the global reality your AVs will navigate.

By combining decades of linguistic precision with modern data annotation workflows, Artlangs offers the scale, security, and diversity required to move your autonomous systems from the test track to the global market.