It’s a pattern I’ve seen time and again with teams pushing machine learning into production. They invest heavily in powerful architectures, tune hyperparameters for weeks, and run endless ablation studies, yet the model still underperforms in the real world. The issue almost always traces back to the same place: the training data isn’t clean enough. Labels are inconsistent, ambiguous cases get handled differently by different annotators, and subtle errors compound into biases or blind spots that no amount of model tweaking can fully fix.

Take object detection in autonomous driving, for example. If bounding boxes on pedestrians vary by several pixels across annotators, or if rare scenarios like low-light reflections get inconsistently tagged, the model learns noisy patterns. It might nail the easy cases in validation but fail dramatically when it matters most. The same goes for NLP tasks—sentiment labels that flip based on cultural nuance, or entity recognition where medical terms get misclassified because the annotator wasn’t domain-trained.
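
One common way to put a number on that kind of annotator drift is intersection over union (IoU): the overlap area of two boxes divided by the total area they cover together. The minimal sketch below assumes boxes given as `(x_min, y_min, x_max, y_max)` pixel coordinates. The pedestrian coordinates are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    # Overlap rectangle; width/height clamp to zero when the boxes don't intersect.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical example: two annotators box the same 40x100-pixel pedestrian,
# with the second box shifted 4 pixels right and 4 pixels down.
annotator_a = (100, 200, 140, 300)
annotator_b = (104, 204, 144, 304)
print(round(iou(annotator_a, annotator_b), 2))  # 0.76
```

A 4-pixel shift on a small object already drops IoU to about 0.76. That matters because evaluation protocols such as COCO's average precision score detections at IoU thresholds all the way up to 0.95.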

Studies back this up. Research on the test sets of popular deep learning benchmarks found that an average of 3.4% of labels are incorrect, and even that small fraction can destabilize benchmark results, making it harder to tell which models actually generalize better. Other work shows models can tolerate some noise, but once mislabeled data climbs toward 20–40%, accuracy starts dropping sharply, sometimes by 10–20% or more depending on the task. These aren’t edge cases; they’re common enough that many production failures get blamed on “overfitting” when the root cause is upstream in the data pipeline.
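
Label errors like these are often surfaced by letting a model audit the data it was trained on. One published approach, confident learning, flags examples where the model's out-of-sample confidence in the given label is unusually low while it is confident about a different class. The sketch below is a heavily simplified, illustrative version of that idea, not the exact published procedure. The function name and the toy probabilities are hypothetical.

```python
def flag_suspect_labels(given_labels, pred_probs):
    """Simplified confident-learning-style check for likely label errors.

    given_labels: annotator label (int class id) for each example.
    pred_probs: per-class predicted probabilities for each example, ideally
        out-of-sample (e.g. from cross-validation) so the model has not
        simply memorized the labels it is checking.
    Returns indices of examples whose given label looks doubtful.
    """
    n_classes = len(pred_probs[0])
    # Per-class threshold: mean probability the model assigns to class j
    # on the examples that annotators labeled j.
    totals, counts = [0.0] * n_classes, [0] * n_classes
    for y, probs in zip(given_labels, pred_probs):
        totals[y] += probs[y]
        counts[y] += 1
    thresholds = [totals[j] / counts[j] if counts[j] else float("inf")
                  for j in range(n_classes)]

    suspects = []
    for i, (y, probs) in enumerate(zip(given_labels, pred_probs)):
        # Flag when the model doubts the given label but is
        # confident about some other class.
        doubts_given = probs[y] < thresholds[y]
        prefers_other = any(probs[k] >= thresholds[k]
                            for k in range(n_classes) if k != y)
        if doubts_given and prefers_other:
            suspects.append(i)
    return suspects


labels = [0, 0, 0, 1, 1, 1]
probs = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9],
         [0.1, 0.9], [0.3, 0.7], [0.15, 0.85]]
print(flag_suspect_labels(labels, probs))  # [2]
```

Flagged items are candidates for re-review by an annotator, not automatic corrections. For real projects, the open-source `cleanlab` library implements the full method.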

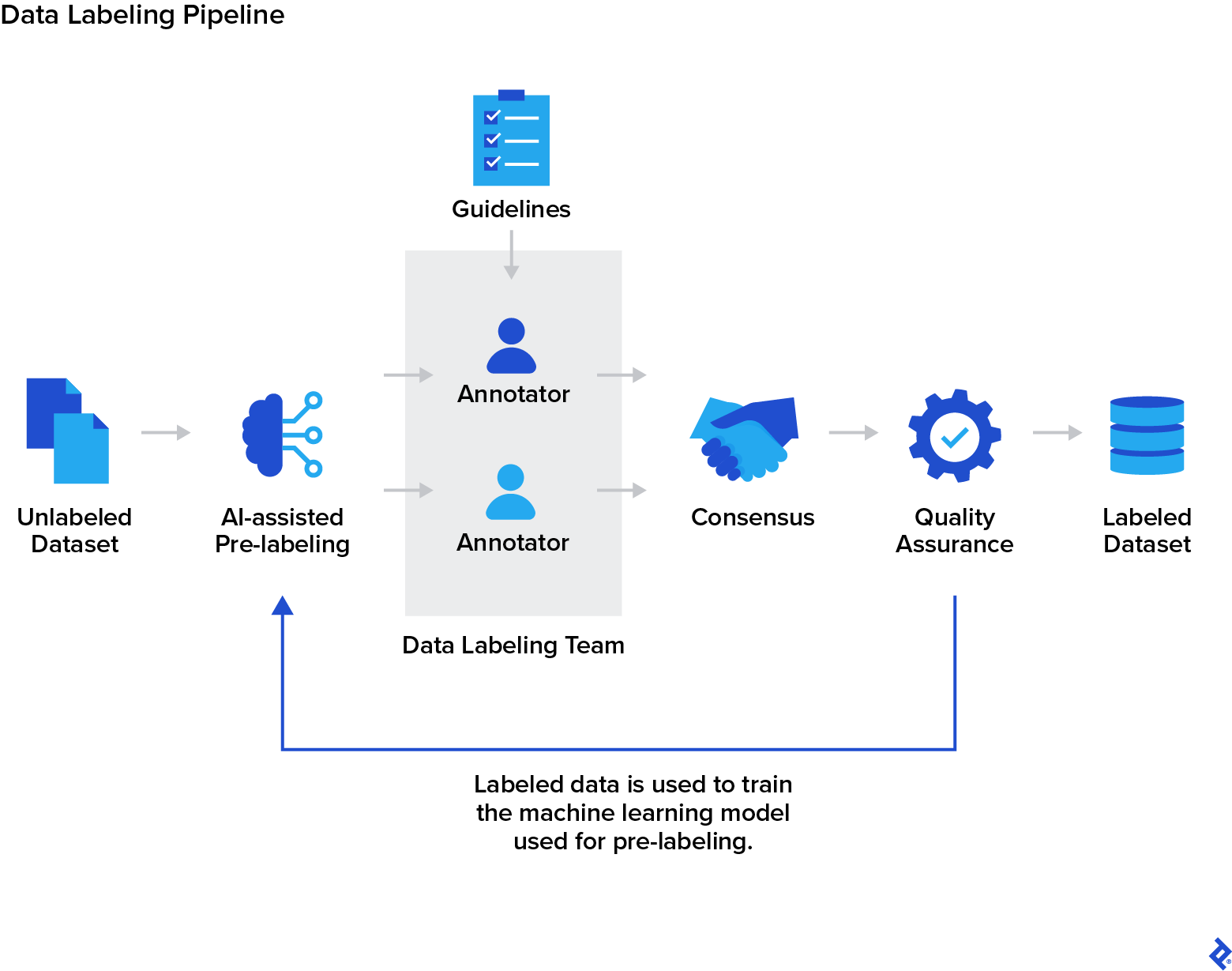

That’s exactly why demand for high-quality AI data annotation services for machine learning has surged. Internal teams rarely have the scale, specialized expertise, or rigorous quality controls needed to label millions of instances without introducing variance. Crowdsourcing platforms help with volume and cost, but they often trade off consistency unless you layer on heavy oversight—which defeats the speed advantage. Professional annotation providers fill this gap with trained experts, detailed guidelines, multi-stage reviews, consensus protocols, and active quality monitoring that keeps error rates low.
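
Consensus protocols differ from provider to provider, but a common baseline is to have several annotators label each item independently, keep the majority label, and route items with weak or tied agreement to a senior reviewer. A minimal sketch of that pattern, where the function name and the two-thirds agreement threshold are illustrative assumptions:

```python
from collections import Counter


def consensus_label(votes, min_agreement=2 / 3):
    """Majority-vote one item's labels; flag weak or tied agreement for review.

    votes: labels from independent annotators for the same item.
    min_agreement: hypothetical share of annotators that must agree.
    Returns (label, needs_review).
    """
    if not votes:
        raise ValueError("need at least one vote")
    counts = Counter(votes)
    # most_common breaks ties by first-encountered order.
    label, top = counts.most_common(1)[0]
    # A tie for first place means there is no real majority.
    tied = sum(1 for c in counts.values() if c == top) > 1
    needs_review = tied or top / len(votes) < min_agreement
    return label, needs_review


print(consensus_label(["positive", "positive", "negative"]))  # ('positive', False)
print(consensus_label(["positive", "negative", "neutral"]))   # ('positive', True)
```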

The market numbers reflect how seriously companies are taking this. The global AI annotation sector was valued at roughly USD 2 billion in 2025 and is on track to reach USD 2.5 billion or more in 2026, with long-term forecasts pointing to somewhere in the USD 10–20 billion range by the early 2030s at compound annual growth rates (CAGRs) of 26–28%. The growth is driven by industries that can’t afford sloppy data: healthcare imaging, where a mislabeled tumor can have life-or-death consequences; manufacturing defect detection; multilingual NLP for global products; and, of course, self-driving technology and robotics.
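
These figures can be sanity-checked with the standard compound-growth formula, value × (1 + rate)^years. The sketch below simply applies the quoted growth rates to the rough 2025 base; it is arithmetic on the numbers cited above, not an independent forecast.

```python
def project(value, rate, years):
    """Compound growth: value * (1 + rate) ** years."""
    return value * (1 + rate) ** years


base_2025 = 2.0  # USD billions, the rough 2025 valuation cited above
for rate in (0.26, 0.28):
    print(f"{rate:.0%}: 2026 = {project(base_2025, rate, 1):.2f}B, "
          f"2032 = {project(base_2025, rate, 7):.1f}B")
# 26%: 2026 = 2.52B, 2032 = 10.1B
# 28%: 2026 = 2.56B, 2032 = 11.3B
```

The 2026 estimate and the lower end of the long-range forecasts follow directly from the 2025 base and the stated rates. Reaching the upper end by the early 2030s would require a larger starting base or a somewhat longer horizon.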

What separates the good providers from the rest is how they handle complexity. They don’t just slap labels on data; they build repeatable processes with domain specialists, inter-annotator agreement checks, and iterative feedback loops. For projects crossing languages or regions, cultural and linguistic nuance becomes critical—idioms, slang, visual context that doesn’t translate directly. A service that understands those layers produces datasets that help models generalize across markets instead of failing on edge cases.
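
For categorical labels, a standard inter-annotator agreement check is Cohen's kappa. It compares how often two annotators actually agree (`p_o`) with how often they would agree by chance given their own label frequencies (`p_e`): `kappa = (p_o - p_e) / (1 - p_e)`. A minimal sketch with made-up sentiment labels:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # p_o: fraction of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: agreement expected if each annotator picked labels at random
    # with their own observed class frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both used one identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg"]
print(round(cohens_kappa(annotator_1, annotator_2), 3))  # 0.667
```

Here the annotators agree on 5 of 6 items, but chance alone would produce 50% agreement, so kappa lands at about 0.67 rather than 0.83. scikit-learn's `sklearn.metrics.cohen_kappa_score` computes the same statistic; for more than two annotators, Fleiss' kappa or Krippendorff's alpha are the usual generalizations.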

In that context, Artlangs Translation brings real depth to the table. They handle over 230 languages and have spent years specializing in translation, video localization, short drama subtitles, game localization, audiobook dubbing, and especially multilingual data annotation and transcription. Their track record includes complex, high-precision projects where accuracy and cultural fidelity were non-negotiable. When your model needs to perform reliably in diverse, real-world settings, partnering with someone who already lives in that multilingual space can make the difference between a dataset that works and one that quietly undermines everything downstream.